
The New Shepard (NS) booster lands after the vehicle's fifth flight, during mission NS-11 on May 2, 2019. Credit: Blue Origin

Some of the most interesting places to study in our solar system are found in the most inhospitable environments – but landing on any planetary body is already a risky proposition. With NASA planning robotic and crewed missions to new locations on the Moon and Mars, avoiding a landing on the steep slope of a crater or in a boulder field is critical to helping ensure a safe touchdown for surface exploration of other worlds. To improve landing safety, NASA is developing and testing a suite of precise landing and hazard-avoidance technologies.

A combination of laser sensors, a camera, a high-speed computer, and sophisticated algorithms will give spacecraft the artificial eyes and analytical capability to find a designated landing area, identify potential hazards, and adjust course to the safest touchdown site. The technologies developed under the Safe and Precise Landing – Integrated Capabilities Evolution (SPLICE) project within the Space Technology Mission Directorate's Game Changing Development program will eventually make it possible for spacecraft to avoid boulders, craters, and more within landing areas half the size of a football field that are already targeted as relatively safe.

A new suite of lunar landing technologies, called Safe and Precise Landing – Integrated Capabilities Evolution (SPLICE), will enable safer and more accurate lunar landings than ever before. Future Moon missions could use NASA's advanced SPLICE algorithms and sensors to target landing sites that were not possible during the Apollo missions, such as regions with hazardous boulders and nearby shadowed craters. SPLICE technologies could also help land humans on Mars. Credit: NASA

During an upcoming mission, three of SPLICE's four main subsystems will have their first integrated test flight on a Blue Origin New Shepard rocket. As the rocket's booster returns to Earth after reaching the boundary between Earth's atmosphere and space, SPLICE's terrain relative navigation, navigation Doppler lidar, and descent and landing computer will run onboard the booster. Each will operate the same way it would when approaching the surface of the Moon.

The fourth major SPLICE component, a hazard detection lidar, will be tested in future ground and flight tests.

Following Breadcrumbs

When a site is chosen for exploration, part of the consideration is ensuring there is enough room for a spacecraft to land. The size of the area, called the landing ellipse, reveals the imprecise nature of legacy landing technology. The targeted landing area for Apollo 11 in 1969 was approximately 11 miles by 3 miles, and astronauts piloted the lander. Subsequent robotic missions to Mars were designed for autonomous landings. Viking arrived on the Red Planet seven years later, in 1976, with a target ellipse of 174 miles by 62 miles.

The Apollo 11 landing ellipse, shown here, was 11 miles by 3 miles. Precision landing technology will drastically reduce the landing area, allowing multiple missions to land in the same region. Credit: NASA

Technology has improved, and the landing zones of later autonomous missions have shrunk. In 2012, the Curiosity rover's landing ellipse was down to 12 miles by 4 miles.
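To put the shrinking ellipses in perspective, the short calculation below compares their areas. It is a back-of-the-envelope sketch that assumes each quoted figure is the full length and width of a true ellipse, so the area is π times the two semi-axes; the names `ELLIPSES` and `ellipse_area` are illustrative only.

```python
import math

# Landing ellipses quoted in the article: (full length, full width) in miles.
# Assumption: each is a true ellipse, so the semi-axes are half these values.
ELLIPSES = {
    "Apollo 11 (1969)": (11.0, 3.0),
    "Viking (1976)": (174.0, 62.0),
    "Curiosity (2012)": (12.0, 4.0),
}


def ellipse_area(length_mi: float, width_mi: float) -> float:
    """Area of an ellipse from its full axes: pi * (length / 2) * (width / 2)."""
    return math.pi * (length_mi / 2.0) * (width_mi / 2.0)


for name, (length, width) in ELLIPSES.items():
    print(f"{name}: {length:g} x {width:g} mi, about {ellipse_area(length, width):,.0f} sq mi")
```

Under that assumption, Viking's target area comes out more than 200 times larger than Curiosity's, while Apollo 11's piloted landing worked within an ellipse smaller than Curiosity's autonomous one.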

Being able to pinpoint a landing site will help future missions target areas for new scientific discoveries in locations previously considered too hazardous for an unpiloted landing. It will also enable advanced supply missions to send cargo and supplies to a single location, rather than spreading them out over miles.

Each planetary body has its own unique conditions. That's why "SPLICE is designed to integrate with any spacecraft landing on a planet or moon," said project manager Ron Sostaric. Based at NASA's Johnson Space Center in Houston, Sostaric explained that the project spans multiple centers across the agency.

Terrain relative navigation provides position measurements during descent by comparing real-time images with preloaded maps of surface features. Credit: NASA

"What we're building is a complete descent and landing system that will work for future Artemis missions to the Moon and can be adapted for Mars," he said. "Our job is to put the individual components together and make sure that it works as a functioning system."

Atmospheric conditions may vary, but the process of descent and landing is the same. The SPLICE computer is programmed to activate terrain relative navigation several miles above the ground. The onboard camera photographs the surface, taking 10 images per second. These are continuously fed into the computer, which is preloaded with satellite images of the landing area and a database of known landmarks.

Algorithms search the real-time imagery for known features to determine the spacecraft's location and safely navigate the craft to its expected landing point. It's similar to navigating by landmarks, such as buildings, rather than by street names.
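The idea of finding known features in a camera frame can be illustrated with the simplest form of map matching: slide a small camera patch over a stored map and keep the offset where the two agree best. The article does not describe SPLICE's actual algorithms, so this is only a sketch of a textbook technique (brute-force template matching by sum of squared differences) on a toy grid; real systems must also cope with scale, rotation, lighting, and noise. The grid, function names, and sizes are all hypothetical.

```python
import random
from typing import List, Optional, Tuple

Grid = List[List[float]]


def ssd(terrain: Grid, patch: Grid, row: int, col: int) -> float:
    """Sum of squared differences between the patch and the map window at (row, col)."""
    total = 0.0
    for r, patch_row in enumerate(patch):
        for c, value in enumerate(patch_row):
            diff = terrain[row + r][col + c] - value
            total += diff * diff
    return total


def locate_patch(terrain: Grid, patch: Grid) -> Tuple[int, int]:
    """Slide the camera patch over the stored map; return the best-matching (row, col)."""
    rows = len(terrain) - len(patch) + 1
    cols = len(terrain[0]) - len(patch[0]) + 1
    best_score: Optional[float] = None
    best_pos = (0, 0)
    for row in range(rows):
        for col in range(cols):
            score = ssd(terrain, patch, row, col)
            if best_score is None or score < best_score:
                best_score, best_pos = score, (row, col)
    return best_pos


# Toy "preloaded map": a 12 x 12 grid of pseudo-random brightness values.
rng = random.Random(42)
terrain_map = [[rng.random() for _ in range(12)] for _ in range(12)]

# Toy "camera frame": a 4 x 4 view cut from the map at row 5, column 4.
camera_patch = [row[4:8] for row in terrain_map[5:9]]

print(locate_patch(terrain_map, camera_patch))  # (5, 4)
```

Because the toy patch is cut directly from the map, the match is exact; with a real camera, the best score is merely the smallest, and the matched offset translates into a position estimate for the spacecraft.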

In the same way, terrain relative navigation identifies where the spacecraft is and sends that information to the guidance and control computer, which is responsible for executing the flight path to the surface. The computer will know approximately when the spacecraft should be nearing its target, almost like laying breadcrumbs and then following them to the final destination.
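The breadcrumb analogy can be made concrete with a toy sketch: a planned descent stored as timed waypoints, and a function that compares a navigation fix against where the plan says the craft should be at that moment. The article does not say how SPLICE represents its trajectory, so the waypoint format, the numbers, and the linear interpolation are all assumptions for illustration.

```python
from bisect import bisect_left
from typing import List, Tuple

# Hypothetical breadcrumbs: (time in s, downrange distance in m, altitude in m).
Waypoint = Tuple[float, float, float]

PLAN: List[Waypoint] = [
    (0.0, 0.0, 6000.0),
    (10.0, 800.0, 5000.0),
    (20.0, 1400.0, 4000.0),
]


def expected_position(plan: List[Waypoint], t: float) -> Tuple[float, float]:
    """Where the plan says the craft should be at time t (linear interpolation)."""
    times = [w[0] for w in plan]
    if t <= times[0]:
        return plan[0][1], plan[0][2]
    if t >= times[-1]:
        return plan[-1][1], plan[-1][2]
    i = bisect_left(times, t)  # index of the first waypoint at or after t
    t0, x0, z0 = plan[i - 1]
    t1, x1, z1 = plan[i]
    frac = (t - t0) / (t1 - t0)
    return x0 + frac * (x1 - x0), z0 + frac * (z1 - z0)


def position_error(plan: List[Waypoint], t: float,
                   measured: Tuple[float, float]) -> Tuple[float, float]:
    """Difference between a navigation fix and the planned breadcrumb at time t."""
    ex, ez = expected_position(plan, t)
    return measured[0] - ex, measured[1] - ez


# A fix at t = 15 s that is 30 m farther downrange and 20 m lower than planned.
print(position_error(PLAN, 15.0, (1130.0, 4480.0)))  # (30.0, -20.0)
```

A guidance computer would feed an error like this into its steering corrections; here it is simply returned to show the comparison step.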

This process continues until about four miles above the surface.

Laser Navigation

Knowing the exact position of the spacecraft is essential for the calculations needed to plan and execute a powered descent to a precise landing. Midway through the descent, the computer activates the navigation Doppler lidar to take velocity and range measurements, which add to the precise navigation information coming from terrain relative navigation. Lidar (light detection and ranging) works much like radar but uses light waves instead of radio waves. Three laser beams, each as narrow as a pencil, are pointed toward the ground. The light from these beams bounces off the surface and reflects back toward the spacecraft.
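The basic ranging arithmetic is simple: light covers the distance twice, out and back, so the range along a beam is the speed of light times the round-trip time, divided by two. The sketch below uses that simplified pulse time-of-flight model, which matches the article's description but may not reflect how the actual instrument measures range. The 22.5° beam tilt, the flat-ground assumption, and the function names are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s (exact, by definition of the metre)


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance along a beam: the light travels out and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def altitude_from_slant_range(slant_range_m: float, beam_tilt_deg: float) -> float:
    """Vertical height for a beam tilted from straight down, assuming flat ground."""
    return slant_range_m * math.cos(math.radians(beam_tilt_deg))


# An echo arriving 20 microseconds after the pulse left: about 3 km along the beam.
slant = range_from_round_trip(20e-6)
print(f"slant range {slant:.1f} m, altitude {altitude_from_slant_range(slant, 22.5):.1f} m")
```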

NASA’s navigation Doppler lidar device consists of a chassis with electro-optic and electronic components, and an optical head with three telescopes. Credit: NASA

The travel time and wavelength of that reflected light are used to calculate how far the craft is from the ground, which direction it is heading, and how fast it is moving. These calculations are made 20 times per second for all three laser beams and fed into the guidance computer.
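Why three beams? Each beam's Doppler shift reveals only the speed along that one beam (the line-of-sight speed, wavelength × shift / 2). Three beams pointed in different, non-coplanar directions give three equations in the three unknown velocity components, which can be solved as a 3 × 3 linear system. The sketch below does this with Cramer's rule; the beam geometry (22.5° from straight down, 120° apart), the 1.55 µm wavelength, and every name in it are assumptions, not SPLICE specifications.

```python
import math
from typing import List, Sequence

Vec3 = List[float]


def beam_direction(tilt_deg: float, azimuth_deg: float) -> Vec3:
    """Unit vector of a beam tilted from nadir; z points straight down toward the ground."""
    t, a = math.radians(tilt_deg), math.radians(azimuth_deg)
    return [math.sin(t) * math.cos(a), math.sin(t) * math.sin(a), math.cos(t)]


def los_speed(doppler_shift_hz: float, wavelength_m: float) -> float:
    """Closing speed along one beam; the factor of 2 accounts for the round trip."""
    return wavelength_m * doppler_shift_hz / 2.0


def det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3 x 3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve_velocity(beams: Sequence[Vec3], speeds: Sequence[float]) -> Vec3:
    """Solve beams @ v = speeds for the velocity vector v using Cramer's rule."""
    d = det3(beams)
    if abs(d) < 1e-12:
        raise ValueError("beam directions are coplanar; velocity is not observable")
    velocity = []
    for k in range(3):
        # Replace column k of the beam matrix with the measured line-of-sight speeds.
        m = [[speeds[i] if j == k else beams[i][j] for j in range(3)] for i in range(3)]
        velocity.append(det3(m) / d)
    return velocity


WAVELENGTH = 1.55e-6  # m, assumed near-infrared laser wavelength
BEAMS = [beam_direction(22.5, az) for az in (0.0, 120.0, 240.0)]  # assumed geometry

# Simulate the Doppler shifts a craft moving at true_v would produce, then invert them.
true_v = [10.0, -4.0, 25.0]  # m/s; positive z means descending
shifts = [2.0 * sum(u[i] * true_v[i] for i in range(3)) / WAVELENGTH for u in BEAMS]
speeds = [los_speed(f, WAVELENGTH) for f in shifts]
print([round(c, 6) for c in solve_velocity(BEAMS, speeds)])  # [10.0, -4.0, 25.0]
```

If the beams all lay in one plane, the determinant would vanish and one velocity component would be unobservable, which is why the function refuses that case.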

Doppler lidar works successfully on Earth. However, Farzin Amzajerdian, the technology's co-inventor and principal investigator at NASA's Langley Research Center in Hampton, Virginia, is responsible for addressing the challenges of using it in space.

"There are still some unknowns about how much signal will come from the surface of the Moon and Mars," he said. If the material on the ground is not very reflective, the signal returning to the sensors will be weaker. But Amzajerdian is confident that lidar will outperform radar technology, because the laser frequency is orders of magnitude higher than that of radio waves, enabling far greater precision and more efficient sensing.
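The "orders of magnitude" claim can be checked with f = c / λ. The same relation explains part of the precision advantage: the Doppler shift produced by a given speed scales as 2 / λ, so a short laser wavelength turns 1 m/s into a far larger, more easily resolved frequency shift. The article gives neither instrument's operating point, so the 1.55 µm laser wavelength and 4.3 GHz radar frequency below are illustrative assumptions.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s

LASER_WAVELENGTH = 1.55e-6  # m; assumed near-infrared lidar wavelength
RADAR_FREQUENCY = 4.3e9  # Hz; assumed radar-altimeter frequency


def doppler_shift_per_mps(wavelength_m: float) -> float:
    """Round-trip Doppler shift produced by 1 m/s of closing speed: 2 / wavelength."""
    return 2.0 / wavelength_m


laser_frequency = SPEED_OF_LIGHT / LASER_WAVELENGTH
radar_wavelength = SPEED_OF_LIGHT / RADAR_FREQUENCY

print(f"laser carrier: {laser_frequency:.3e} Hz")
print(f"laser/radar frequency ratio: {laser_frequency / RADAR_FREQUENCY:,.0f}")
print(f"shift per m/s: laser {doppler_shift_per_mps(LASER_WAVELENGTH):,.0f} Hz, "
      f"radar {doppler_shift_per_mps(radar_wavelength):.1f} Hz")
```

With these assumed values, the laser frequency is more than four orders of magnitude above the radar's, and the Doppler shift per unit speed grows by the same factor.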

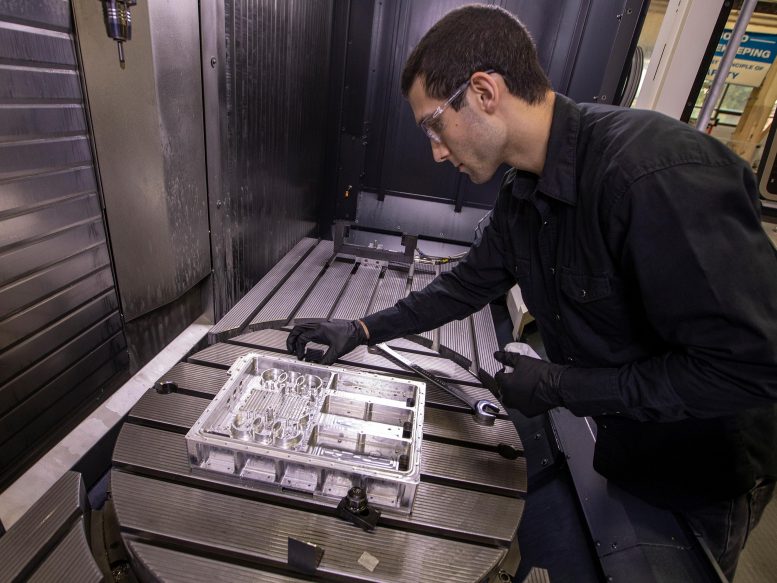

Langley engineer John Savage inspects a section of the navigation Doppler lidar unit after its manufacture from a block of metal. Credit: NASA/David C. Bowman

The workhorse responsible for managing all of this data is the descent and landing computer. Navigation data from the sensor systems is fed to onboard algorithms, which calculate new pathways for a precise landing.

Computing Power

The descent and landing computer synchronizes the functions and data management of the individual SPLICE components. It must also integrate seamlessly with the other systems on any spacecraft. This small computing powerhouse therefore keeps the precision landing technologies from overloading the primary flight computer.

The computational needs identified early in the project made it clear that existing computers were inadequate. NASA's High-Performance Spaceflight Computing processor would meet the demand, but it is still several years from completion. An interim solution was needed to prepare SPLICE for its first suborbital rocket flight test with Blue Origin on its New Shepard rocket. Performance data from the new computer will help shape its eventual replacement.

SPLICE hardware undergoes preparations for a vacuum chamber test. Three of SPLICE's four main subsystems will have their first integrated test flight on a Blue Origin New Shepard rocket. Credit: NASA

John Carson, technical integration manager for precision landing, explained that "the surrogate computer has very similar processing technology, which is informing both the future high-speed computer design and future descent and landing computer integration efforts."

Looking ahead, test missions like these will help shape safe landing systems for missions by NASA and commercial providers on the surface of the Moon and other solar system bodies.

"Landing safely and precisely on another world still has many challenges," Carson said. "There's no commercial technology yet that you can go out and buy for this. Every future surface mission could use this precision landing capability, so NASA is meeting that need now. And we're fostering the transfer and use of this technology with our industry partners."