A study links learning to the personality of cells

How we learn is a question studied by scientists from many disciplines, each approaching it from a different perspective. People who work on artificial intelligence, on how its processes should be built and how it acquires knowledge, maintain that we can learn important lessons from our own biology to improve AI. Along these lines, researchers at Imperial College London found that variability between brain cells can speed up learning and improve the performance of both the brain and the AI of the future.


The new study found that when the electrical properties of individual cells were varied in simulations of brain networks, the networks learned faster than simulations built from identical cells.

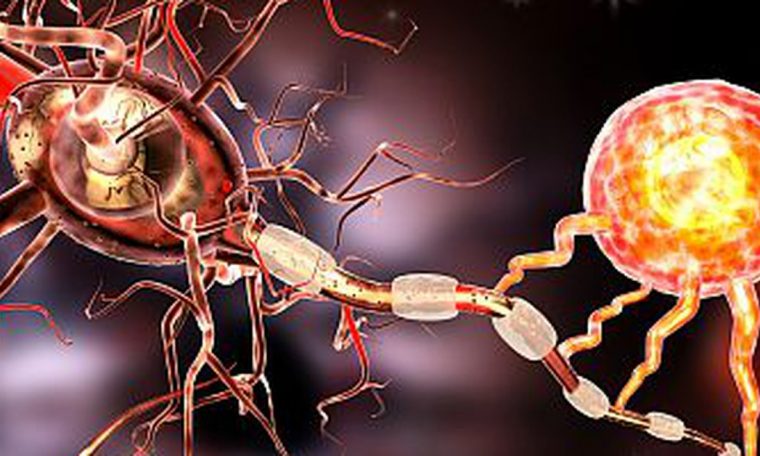

Scientists find that variability between brain cells can accelerate learning and improve brain performance (Getty)

They also found that the network needed fewer modified cells to achieve the same results, and that the method consumed less energy than a model built from identical cells.

The authors say their findings could teach us why our brains are so good at learning and could even help us build better artificially intelligent systems, such as digital assistants that recognize voices and faces, or self-driving car technology.

Lead author Nicolas Perez, a doctoral student in the Department of Electrical and Electronic Engineering at Imperial College London, said that “the brain needs to be energy efficient while still being able to excel at solving complex tasks. Our work suggests that having a diversity of neurons in both the brain and artificial intelligence meets both these needs and can boost learning.”

The authors say their findings could teach us why our brains are so good at learning (Getty)

Snowflake-like neurons

The brain is made up of billions of cells called neurons, which are connected to one another in vast networks that allow us to learn about the world. Neurons are like snowflakes: they look alike from a distance, but on closer inspection it becomes clear that no two are exactly alike.

In contrast, every cell in an artificial neural network, the technology on which artificial intelligence is based, is identical; only the connections between cells differ. Despite the speed at which AI technology is advancing, its neural networks do not learn as accurately or as quickly as the human brain, and the researchers wondered whether their lack of cellular variability might be to blame.

They set out to study whether emulating the brain, by varying the properties of the cells in a neural network, could boost learning in AI. They found that variability between cells improved learning and reduced energy consumption.

Researchers compare neurons to snowflakes

Dan Goodman, a co-author of the study, also from the Department of Electrical and Electronic Engineering at Imperial College London, said: “Evolution has given us incredible brain functions, most of which we are only just beginning to understand.”

To conduct the study, the researchers focused on adjusting each cell's “time constant”, that is, how quickly the cell decides what to do based on what the cells connected to it are doing. Some cells decide very quickly, looking only at what their connected neighbours have just done. Others respond more slowly, basing their decision on what the other cells have been doing over a period of time.
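
To make the idea of a time constant more concrete, here is a minimal Python sketch. It assumes each cell behaves like a simple leaky integrator, a common textbook simplification that the article itself does not specify; the function name `simulate_leaky_units`, the pulse input and the time-constant values are all hypothetical, and this is not the researchers' model or code. Two units receive the same brief input pulse and differ only in their time constant τ:

```python
import math


def simulate_leaky_units(inputs, taus, dt=1.0):
    """Simulate hypothetical leaky integrator units that share one input signal.

    Each unit follows dv/dt = (-v + x(t)) / tau. Holding the input constant
    over each step of length dt gives the exact update
    v <- alpha * v + (1 - alpha) * x, with alpha = exp(-dt / tau).
    Returns one voltage trace (a list) per time constant in `taus`.
    """
    traces = []
    for tau in taus:
        alpha = math.exp(-dt / tau)  # per-step decay: closer to 1 for slow units
        v = 0.0
        trace = []
        for x in inputs:
            # Small tau: v jumps to the latest input; large tau: v changes slowly.
            v = alpha * v + (1.0 - alpha) * x
            trace.append(v)
        traces.append(trace)
    return traces


# Hypothetical input: a pulse lasting 5 steps, then 15 steps of silence.
pulse = [1.0] * 5 + [0.0] * 15
fast, slow = simulate_leaky_units(pulse, taus=[1.0, 20.0])

print(f"end of pulse:   fast={fast[4]:.3f}, slow={slow[4]:.3f}")
print(f"15 steps later: fast={fast[-1]:.3f}, slow={slow[-1]:.3f}")
```

In this toy setting the fast unit (τ = 1) climbs to about 0.99 during the pulse and has decayed almost to zero 15 steps later, while the slow unit (τ = 20) only reaches about 0.22 but still holds about 0.10 afterwards. A network that mixes both kinds of cells can, in principle, draw on recent and longer-term information at the same time.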

After varying the cells' time constants, the researchers tasked the network with several benchmark machine learning tasks: classifying images of clothing and handwritten digits, recognizing human gestures, and identifying spoken digits and commands.

Despite the speed at which AI technology advances, its neural networks do not learn as accurately or as rapidly as the human brain (Ralwell/iStock/Thinkstock).

The results showed that allowing the network to combine slow and fast information made it better at solving tasks in more complex, real-world settings.

When they changed the amount of variability in the simulated networks, they found that the best-performing networks matched the amount of variability observed in the brain, which suggests that the brain may have evolved to have just the right amount of variability for optimal learning.

“We have shown that AI can be brought closer to how our brains work by emulating certain brain properties. However, current AI systems are still far from the level of energy efficiency that we find in biological systems,” Perez explained. “As a next step, we will look at how to reduce the energy consumption of these networks so that AI networks can perform as efficiently as the brain,” he concluded.
