A 7nm chip that delivers two to three times the performance of Kunlun I, according to Baidu.

Baidu has announced that it has begun mass production of the second generation of its Kunlun processor. The company is also launching a new version of Baidu Brain, Baidu Brain 7.0, for its PaddlePaddle platform, which it describes as “one of the world’s largest open AI platforms”.

The press release states that “Kunlun II delivers 2-3 times more processing power than the previous generation”. The chip is manufactured on a 7nm node and is built on Baidu’s second-generation XPU architecture. Like its predecessor, it targets AI applications; Baidu mentions areas such as cloud computing, edge computing and autonomous driving. At present, Kunlun I processors are mainly used in Baidu data centers and the Apollo autonomous vehicle platform. As for the AI platform, it currently has “over 3.6 million developers in the world” who have “developed 400,000 AI models through PaddlePaddle, serving over 130,000 businesses and institutions across a wide range of sectors and industries”.


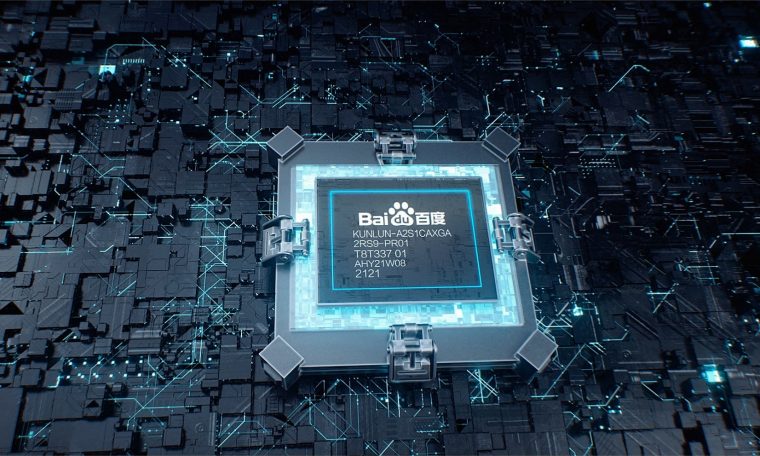

Kunlun II Demonstration

As you can see, Baidu doesn’t give very precise figures for Kunlun II. The company claims a performance increase of 2-3x over the first Kunlun, an FPGA chip manufactured by Samsung on a 14nm process. According to our colleagues at Tom’s Hardware US, the first Kunlun delivers 256 INT8 TOPS, 64 INT/FP16 TOPS and 16 INT/FP32 TOPS at 150 watts. From these numbers, they estimate that Kunlun II achieves 512 to 768 INT8 TOPS, 128 to 192 INT/FP16 TOPS, and 32 to 48 INT/FP32 TOPS. The table below compares Kunlun I and II with a solution that is a bit more familiar to us, the NVIDIA A100, which comes out ahead of the top Kunlun II estimate in INT8 only when sparsity is used. On paper, Kunlun II looks competitive for AI computation. That said, these figures remain theoretical, and software optimization is also an important factor.
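The estimated ranges above are simple arithmetic: each Kunlun I figure multiplied by the low and high ends of Baidu's claimed 2-3x gain. A minimal sketch of that calculation follows; the names `KUNLUN_I_TOPS`, `SPEEDUP_RANGE` and `estimate_kunlun_ii` are hypothetical, and the uniform scaling across all number formats is an assumption of the estimate, not a specification published by Baidu.

```python
# Kunlun I peak throughput in TOPS, as quoted in the article (at 150 W).
KUNLUN_I_TOPS = {"INT8": 256, "INT/FP16": 64, "INT/FP32": 16}

# Baidu's claimed speedup range for Kunlun II over Kunlun I.
SPEEDUP_RANGE = (2, 3)


def estimate_kunlun_ii(baseline, speedup_range=SPEEDUP_RANGE):
    """Scale each baseline figure by the low and high speedup factors.

    Assumes the same factor applies to every number format, which is
    how the estimate is derived, not a published Baidu figure.
    """
    low, high = speedup_range
    return {fmt: (tops * low, tops * high) for fmt, tops in baseline.items()}


if __name__ == "__main__":
    for fmt, (lo, hi) in estimate_kunlun_ii(KUNLUN_I_TOPS).items():
        print(f"{fmt}: {lo} to {hi} TOPS")
```

Run as a script, this prints one estimated range per number format, matching the 512 to 768, 128 to 192 and 32 to 48 TOPS figures quoted above.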

| Processor | Baidu Kunlun | Baidu Kunlun II | NVIDIA A100 |
| --- | --- | --- | --- |
| INT8 | 256 TOPS | 512~768 TOPS | 624/1248* TOPS |
| INT/FP16 | 64 TOPS | 128~192 TOPS | 312/624* TFLOPS (bfloat16/FP16 Tensor) |
| Tensor Float32 (TF32) | – | – | 156/312* TFLOPS |
| INT/FP32 | 16 TOPS | 32~48 TOPS | 19.5 TFLOPS |
| FP64 (Tensor) | – | – | 19.5 TFLOPS |
| FP64 | – | – | 9.7 TFLOPS |

\* With sparsity.

Source: Baidu, via Tom’s Hardware US